Mastering for Streaming: Platform Loudness and Normalization Explained

Quick Answer

Mastering for streaming services has changed how we prepare masters for distribution. The process of loudness normalization ultimately alters the loudness of a streamed track, varies from platform to platform, and needs to be kept in mind when preparing a master for various streaming services.

Mastering for Streaming: Platform Loudness and Normalization Explained in Detail

We’ve discussed this topic on our site before, but understanding how streaming services operate is a bit of a moving target. What was true about these services one day may not be true the next.

With that in mind, we want to go into detail about multiple streaming services and how they’ll affect the loudness of your master.

The services we’ll cover include:

- Spotify

- YouTube

- Apple Music

- Tidal

- Amazon Music

- SoundCloud

- Bandcamp

- Netflix

We’ll also discuss some important topics that heavily relate to streaming services and normalization.

Knowing how to master for streaming services means understanding how they affect your masters.

Some of the things we’ll define and discuss are:

- Loudness Normalization

- Integrated LUFS

- dB True Peak

- Dynamic Range

- ReplayGain

- Encoding

Before we get started, if you have a mix that you’d like to hear mastered for a specific service, send it to us here:

We’ll master it for you and send you a free sample to review.

What is Loudness Normalization?

Loudness normalization is the process of a computer program turning a track up or down prior to streaming; this is done to match a target loudness predetermined by the streaming service. Some streaming services will turn a track up and down, whereas others only turn it down if it's too loud.

A streaming service will alter the loudness of a master using clean gain reduction or amplification. This process doesn’t affect the dynamic range of a master.

If a master has incredibly loud peaks and a large dynamic range, some of these peaks may hit a limiter used by the streaming service - in that rare case, the dynamic range may be affected.

Let’s look at some examples to understand this better.

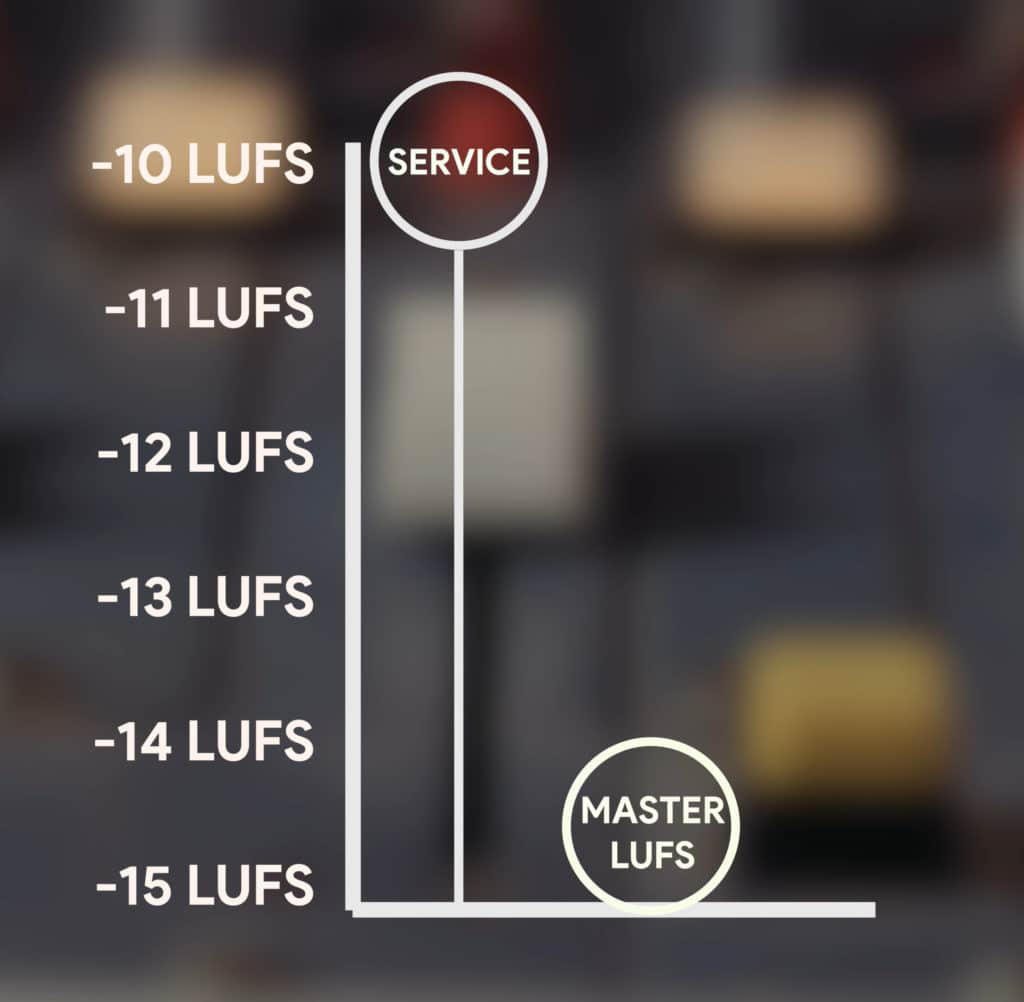

Notice that the master is 5 LUFS quieter than the target loudness of the streaming service.

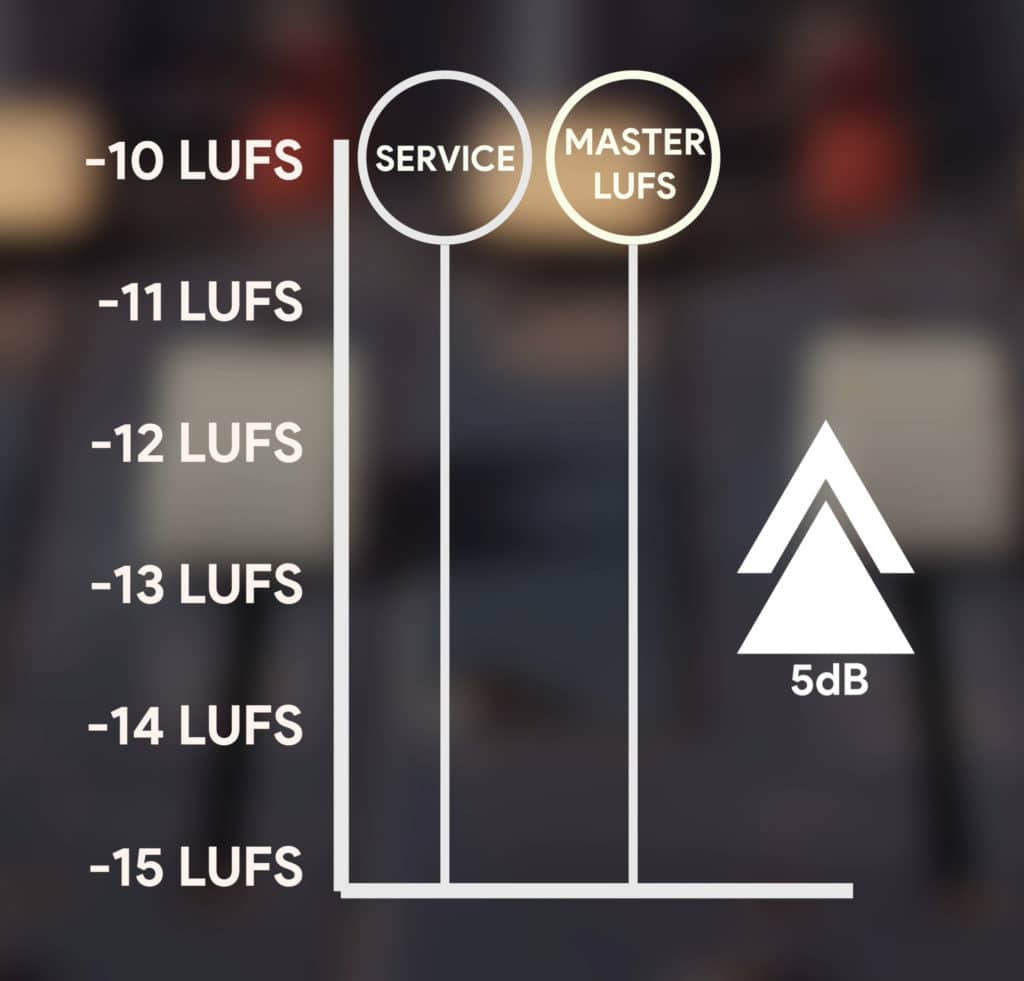

In this example the master is turned up 5dB.

If a streaming service normalizes audio to an integrated -10 LUFS, and a track has an integrated -15 LUFS, then the track will be turned up by 5dB.

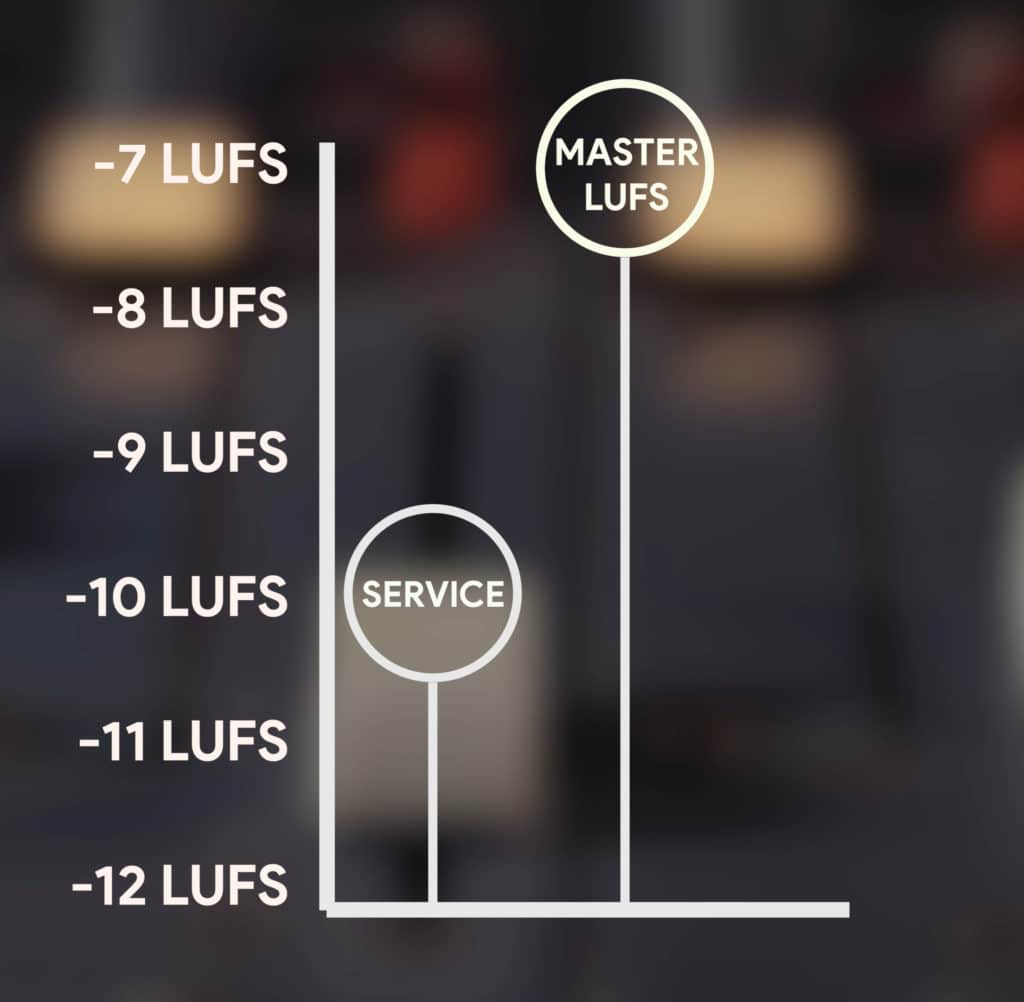

If this same streaming service received a track that has an integrated -7 LUFS, this track will be turned down 3dB.

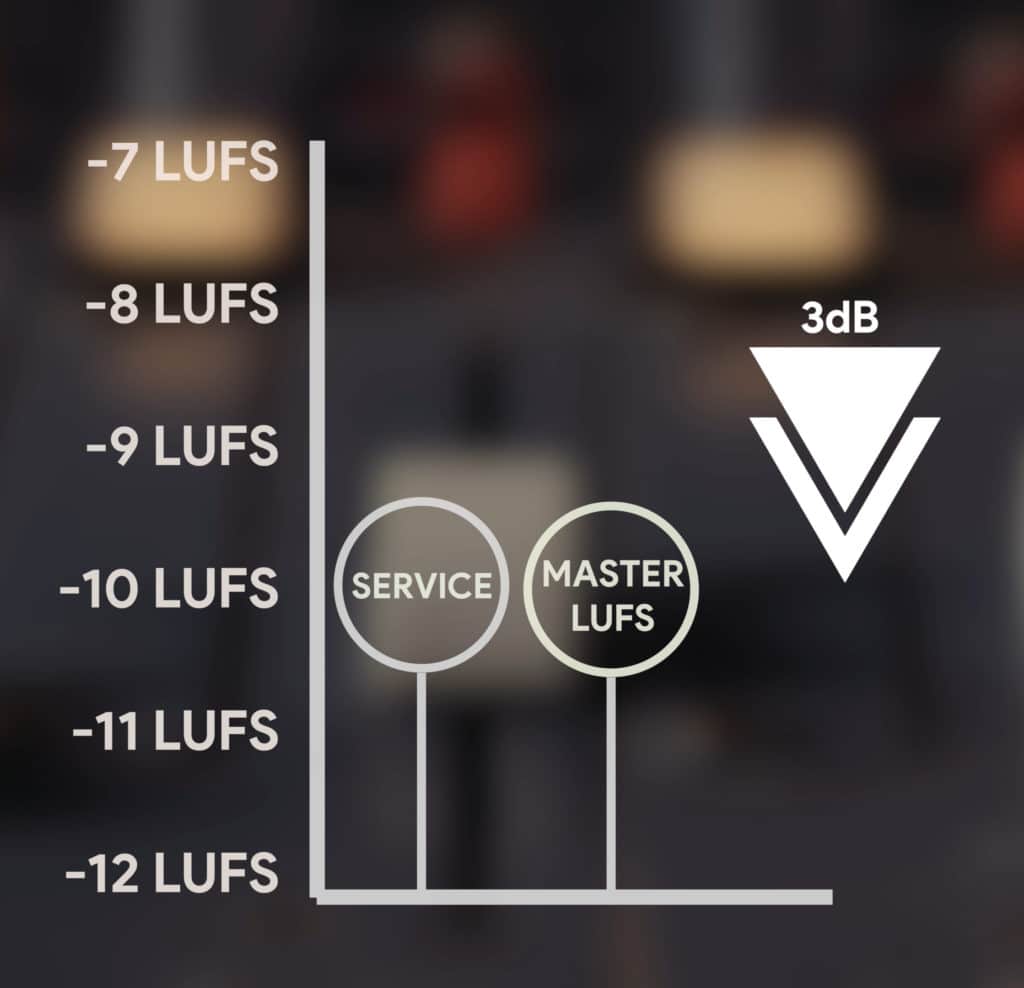

Notice that the master is 3 LUFS louder than the streaming service's target loudness.

In this example, the master will be turned down 3dB.

Let’s say a track has an integrated loudness of -15 LUFS, and its loudest peak is at -3dBTP. If we’re using the same streaming service, the track will be turned up 5dB. What would this mean for the -3dBTP peak?

It too would have its gain increased by 5dB, which would put it at +2dBTP. Assuming the service's limiter holds peaks at 0dBTP, this peak would hit the limiter and be attenuated 2dB, in turn reducing the track’s dynamic range.
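The arithmetic in these examples can be sketched in a few lines of Python. This is only an illustration of the reasoning above, not any service's actual code: the function name `normalize`, the -10 LUFS target, and the 0dBTP limiter ceiling are assumptions carried over from the examples.

```python
def normalize(integrated_lufs, peak_dbtp, target_lufs=-10.0,
              turn_up=True, ceiling_dbtp=0.0):
    """Return (gain_db, limited_db) for one track.

    gain_db    -- clean gain applied to reach the target loudness
    limited_db -- how far the loudest peak would exceed the ceiling after
                  that gain (0.0 if the limiter is never reached)
    """
    gain_db = target_lufs - integrated_lufs
    if gain_db > 0 and not turn_up:
        # Down-only services leave quiet tracks untouched.
        gain_db = 0.0
    overshoot_db = peak_dbtp + gain_db - ceiling_dbtp
    limited_db = max(0.0, overshoot_db)
    return gain_db, limited_db


print(normalize(-15.0, -3.0))                 # (5.0, 2.0)  turned up, peak limited by 2dB
print(normalize(-7.0, -1.0))                  # (-3.0, 0.0) turned down, limiter untouched
print(normalize(-15.0, -3.0, turn_up=False))  # (0.0, 0.0)  down-only service, no change
```

Only the first case touches the limiter, which is why normalization so rarely changes a master's dynamic range.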

None of this would make much sense if you didn’t know what an integrated LUFS is, so let’s look at that. Also, let’s look at some other important definitions.

If you’d like to learn more about audio mastering, or maybe, how to master your music, check out our blog post and video on the topic:

It's full of great information on audio mastering, and how you can create a great sounding master.

What is an Integrated LUFS?

Integrated LUFS is a measurement of a signal's loudness averaged over the full length of a track, expressed in Loudness Units relative to Full Scale, and offers a good indication of the perceived loudness of that track. Streaming services use a track's integrated LUFS to determine how to alter its gain, as a set integrated LUFS target is programmed into each streaming service.

An increase of 1dB in gain is equivalent to an increase of 1 LUFS.

As we covered in the previous section, a track’s amplitude is altered in dB when it’s being normalized. Because one loudness unit is the same size as one decibel, a 1dB gain increase raises the integrated loudness by 1 LUFS.
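To see why gain in dB maps onto loudness units, here is a small sketch. It uses a plain mean-square level as a stand-in for loudness; a real LUFS meter also applies frequency weighting and gating, which are left out here, so treat the helper names and the test tone as illustrative assumptions.

```python
import math


def mean_square_level_db(samples):
    """Unweighted, ungated level in dB (a simplification, NOT a full LUFS meter)."""
    mean_square = sum(s * s for s in samples) / len(samples)
    return 10.0 * math.log10(mean_square)


def apply_gain(samples, gain_db):
    """Scale every sample by the linear factor equivalent to gain_db."""
    factor = 10.0 ** (gain_db / 20.0)
    return [s * factor for s in samples]


# One second of a 440 Hz sine at 48 kHz, standing in for a track.
rate = 48000
track = [0.25 * math.sin(2 * math.pi * 440 * n / rate) for n in range(rate)]

before = mean_square_level_db(track)
after = mean_square_level_db(apply_gain(track, 1.0))
print(round(after - before, 6))  # 1.0
```

The measured level moves by the same amount as the gain that was applied, which is the behavior normalization relies on.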

What is dBTP or dB True Peak?

Whereas an integrated LUFS measures the perceived loudness of a signal, dBTP is used to measure the momentary peaks of a signal. dBTP takes inter-sample peaking into consideration, as it measures the signal between a digital recording’s samples - this makes it a more accurate form of peak measurement.

Streaming services measure the dBTP as well and introduce a limiter when the peaks approach 0dBTP. This ensures that loudness normalization does not cause clipping distortion.
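Inter-sample peaks are easier to picture with a small example. The sketch below samples a full-scale sine wave so that every sample lands on the slope rather than the crest. A real true-peak meter recovers the in-between values by oversampling the recording; here we already know the waveform, so we simply evaluate it on a finer grid as a stand-in. The function names are our own.

```python
import math


def to_db(amplitude):
    """Convert a linear amplitude (1.0 = full scale) to dB."""
    return 20.0 * math.log10(amplitude)


def sine(position):
    """Full-scale sine at one quarter of the sample rate, offset by 45 degrees.

    `position` is measured in samples and may be fractional.
    """
    return math.sin(math.pi / 2 * position + math.pi / 4)


# Peak as seen by looking only at the stored samples.
sample_peak = max(abs(sine(n)) for n in range(64))

# Peak as seen on a grid four times finer, standing in for oversampling.
oversampled_peak = max(abs(sine(n / 4)) for n in range(256))

print(round(to_db(sample_peak), 2))       # -3.01
print(round(to_db(oversampled_peak), 2))  # 0.0
```

A sample-peak meter would report about 3dB of headroom on this signal, even though the waveform itself reaches full scale between samples.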

What is a Dynamic Range and is it Affected by Loudness Normalization?

The dynamic range of a signal is the difference between its quietest level and its loudest level or its loudest peak. Loudness normalization rarely affects the dynamic range, but if the signal being normalized is very dynamic, and is turned up enough, a peak may be limited during loudness normalization.

If you want to learn more about dynamics, especially how they relate to mastering music, check out our video and blog post on the topic:

We offer some easy to understand information about headroom, without getting too weighed down in technical info.

What is ReplayGain?

ReplayGain is a popular loudness normalization standard and, at the time of writing, is used by Spotify to normalize the loudness of tracks streamed on its service. ReplayGain measures the RMS of a track prior to its streaming and applies gain amplification or attenuation in accordance with its target integrated LUFS.

Sometimes, ReplayGain is used synonymously with the term loudness normalization, so if you come across it, just know that it refers to a method of normalizing the loudness of audio.

How Does Encoding Affect the Loudness of a Master?

Although loudness normalization affects the loudness of a master more aggressively, the process of encoding also affects the level of a master. Encoding to a lossy format can raise the peaks of a master by 0.5dB, if not more in some circumstances - meaning it needs to be factored in when mastering.

In some cases, encoding can increase the peak level of a master by as much as 1dB.
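A quick way to reason about this is to add the expected encoder overshoot to your master's true peak and see whether the result stays under 0dBTP. The sketch below does exactly that; the function names are hypothetical, and the 0.5dB and 1dB overshoot figures are simply the estimates quoted above, not measurements of any particular codec.

```python
def peak_after_encoding(master_peak_dbtp, encoder_overshoot_db=0.5):
    """Estimate the true peak after lossy encoding (overshoot is an assumed figure)."""
    return master_peak_dbtp + encoder_overshoot_db


def is_safe(master_peak_dbtp, encoder_overshoot_db=0.5, ceiling_dbtp=0.0):
    """True if the estimated post-encoding peak stays at or below the ceiling."""
    return peak_after_encoding(master_peak_dbtp, encoder_overshoot_db) <= ceiling_dbtp


for peak in (-0.1, -1.0, -2.0):
    typical = is_safe(peak)                              # 0.5dB overshoot
    worst = is_safe(peak, encoder_overshoot_db=1.0)      # 1dB overshoot
    print(f"master peak {peak}dBTP: safe at 0.5dB={typical}, safe at 1dB={worst}")
```

A master peaking at -0.1dBTP fails both checks, while one peaking at -1dBTP only just survives the 1dB case, which is why -1dBTP is such a common recommendation.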

How Does Loudness Normalization Happen on Different Streaming Services?

Loudness normalization occurs differently from streaming service to streaming service, as some have set a different target LUFS than other services - additionally, some use different measurement systems which may cause differences in playback levels from service to service. The audio codec used by these services will vary as well.

Now that we better understand some of the definitions relevant to loudness normalization, and that streaming services are different in how they process audio, it helps to understand the specifics of what Spotify, YouTube, and other streaming services do to a signal.

Let’s cover how they specifically affect a track prior to streaming.

How does Spotify use Loudness Normalization?

Spotify uses ReplayGain to measure the loudness of a signal - software which measures the RMS of the signal in 50ms increments and then applies clean gain to increase or decrease the integrated LUFS. The loudness which Spotify normalizes to is typically an integrated -14 LUFS.

Spotify uses ReplayGain to adjust its tracks. The process measures the signal using RMS and breaks it up into 50ms increments.
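To make the idea of 50ms RMS increments more concrete, here is a simplified sketch that splits a signal into 50ms blocks and reports the RMS level of each one. It is not the ReplayGain algorithm itself, which involves further processing beyond this step; the function name and the test signal are assumptions for illustration only.

```python
import math


def block_rms_db(samples, sample_rate, block_ms=50):
    """Return the RMS level in dB of each consecutive block of block_ms milliseconds."""
    block_len = int(sample_rate * block_ms / 1000)
    levels = []
    for start in range(0, len(samples) - block_len + 1, block_len):
        block = samples[start:start + block_len]
        mean_square = sum(s * s for s in block) / block_len
        # Floor the value so that silent blocks do not cause log10(0).
        levels.append(10.0 * math.log10(max(mean_square, 1e-12)))
    return levels


rate = 44100
# Half a second of a quiet 1 kHz tone followed by half a second of a louder one.
quiet = [0.1 * math.sin(2 * math.pi * 1000 * n / rate) for n in range(rate // 2)]
loud = [0.5 * math.sin(2 * math.pi * 1000 * n / rate) for n in range(rate // 2)]

levels = block_rms_db(quiet + loud, rate)
print(len(levels))                                # 20 blocks in one second
print(round(levels[0], 1), round(levels[-1], 1))  # -23.0 -9.0
```

Measuring in short blocks like this lets a loudness algorithm see how the level of a track changes over time rather than relying on a single peak value.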

Just as importantly, Spotify uses a lossy format to stream your music (the Ogg Vorbis file type), so the encoding process will change the amplitude of your master.

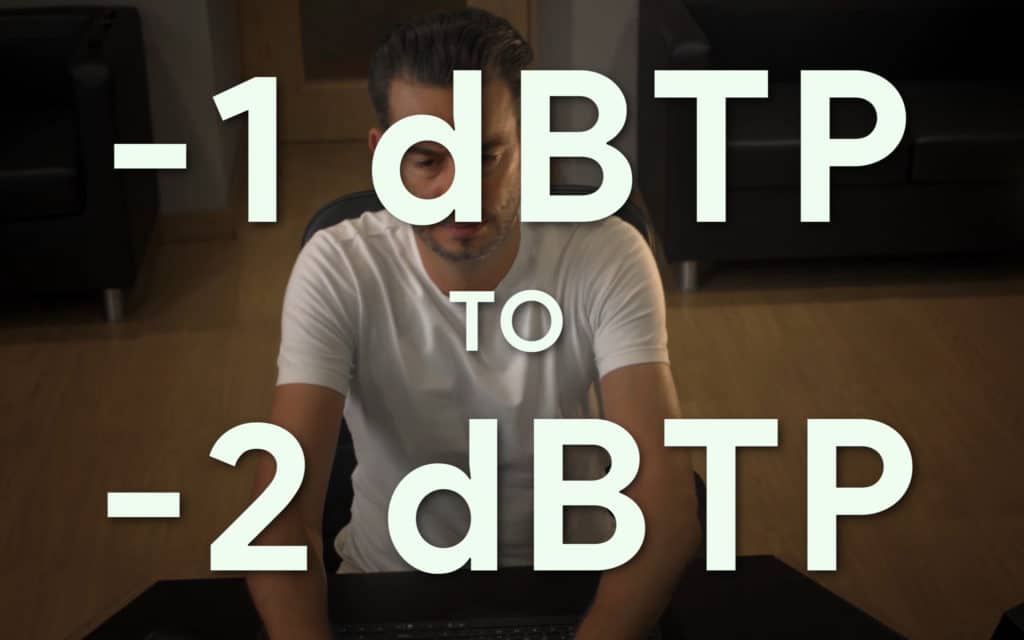

Spotify recommends having your master’s max peak set at -1dBTP.

Spotify's target loudness is -14 LUFS.

It also offers its listeners a loud option which normalizes the audio to an integrated -11 LUFS - Spotify recommends setting your max peak to -2dBTP when making a louder master, to avoid additional distortion.

If you're distributing music to Spotify, have the max peak set to -1dBTP.

Spotify will also turn down your master if it’s too loud - this won’t affect your dynamic range, but if you mastered your track loudly, then you may have sacrificed the dynamic range for loudness that won’t translate to the listener.

Because Spotify measures in RMS, it may adjust signals slightly differently than services that measure in LUFS.

It should be noted that although Spotify uses LUFS targets, it still measures the signal in RMS - meaning that some discrepancies may exist between Spotify and other streaming services that use -14 LUFS as a target.

Do you want to master your music for Spotify and other services, but you don’t have the right plugins for the job? Check out our video on the Top 10 Free Mastering Plugins

How does YouTube use Loudness Normalization?

Similar to Spotify, YouTube uses -14 LUFS as a target loudness - unlike Spotify, YouTube measures the signal using an integrated LUFS method, not RMS. YouTube will only turn audio down if it is above an integrated -14 LUFS; it will not turn quiet tracks up.

YouTube normalizes to -14 LUFS, but only turns tracks down if they're too loud.

YouTube uses a lossy format to stream audio (an AAC file format). This means that the encoding process will most likely alter the gain of your audio - leave 1 to 2dBTP of headroom to ensure that clipping distortion does not occur.

YouTube measures the signal in LUFS.

If you’d like to see how YouTube is affecting your music or video’s audio, you can right-click on your video (or anyone else’s video) and select ‘Stats for Nerds.’

It's best to leave 1 to 2dBTP of headroom when mastering for YouTube.

This will show you how much your audio is being turned down, or how far away in dB your audio is from an integrated -14 LUFS.
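If you want to translate that dB figure into how much quieter the audio actually plays, the conversion is a one-liner. The sketch below assumes you have a dB offset from the -14 LUFS target (positive meaning louder than the target) and that the service only turns audio down, as described above. The function name is our own and is not something YouTube exposes.

```python
def playback_scale(offset_db):
    """Linear volume factor for a down-only service.

    offset_db is how far the audio sits above (+) or below (-) the target.
    """
    if offset_db <= 0:
        # At or below the target: played back untouched.
        return 1.0
    return 10.0 ** (-offset_db / 20.0)


for offset in (6.0, 3.0, -4.0):
    percent = round(playback_scale(offset) * 100)
    print(f"{offset:+.1f}dB from target -> played at {percent}% amplitude")
```

Audio that is 6dB over the target is played back at roughly half its original amplitude, while audio that is under the target is left alone.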

If you’re mastering dialogue, not music, and you’re curious if that affects how you should process your audio, take a look at our blog post and video on the topic:

In it, we detail what makes mastering for a podcast different than mastering music.

How does Apple Music use Loudness Normalization?

Apple Music uses its proprietary software, known as Apple Sound Check, to measure the level of audio - although normalization needed to be enabled a couple of years ago, it is now the default setting for Apple Music users. Apple Music normalizes audio to an integrated -16 LUFS.

Apple Music normalizes to -16 LUFS.

Apple Music streams tracks using the AAC lossy format at 256 kbps. This means that distortion can occur during the encoding process, so it’s best to set the peak of your master to -1dBTP.

It streams music at 256kbps, so master with a lower max true peak.

Currently, Apple Sound Check turns music both up and down depending on its volume.

How does Tidal use Loudness Normalization?

Tidal normalizes audio to an integrated -14 LUFS but can be set to a quieter -18 LUFS setting by listeners. At the time of writing this, Tidal does not turn up tracks on its streaming service - it only turns them down, meaning some tracks may sound quieter than others.

Tidal normalizes audio to -14 LUFS, but only turns audio down if it's too loud.

Tidal offers multiple quality types for their playback:

- Normal - an undisclosed lossy format

- High - an AAC 320kbps lossy format

- HiFi - a FLAC 44.1kHz, 16-bit file

- Master - a FLAC 96kHz, 24-bit file

It should be noted that the Master quality streaming can go up to a 352kHz sampling rate for some masters.

Tidal's highest settings offer studio quality playback.

Due to the higher quality, encoding distortion won’t be as much of an issue when mastering for Tidal.

Because of the higher quality encoding, a max peak of -0.5dBTP will prevent distortion.

Tidal also normalizes the loudness of tracks based on the overall loudness of the album these tracks are associated with. It does this for playlists as well.
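Album-based normalization means a single gain value is applied to every track on the album, so the quiet songs stay quieter than the loud ones. The sketch below illustrates the idea under a few assumptions: the album's overall loudness is supplied as an input (we don't know how Tidal measures it), the track names and values are made up, and the gain is down-only as described for Tidal above.

```python
def album_gains(track_lufs, album_lufs, target_lufs=-14.0):
    """Apply one shared, down-only gain to every track on an album.

    Returns {title: (gain_db, loudness_after_gain)}.
    """
    gain_db = min(0.0, target_lufs - album_lufs)
    return {title: (gain_db, lufs + gain_db) for title, lufs in track_lufs.items()}


# Hypothetical album: integrated loudness of each track in LUFS.
album = {"Intro": -20.0, "Single": -9.0, "Ballad": -14.0}

result = album_gains(album, album_lufs=-10.0)
for title, (gain_db, after) in result.items():
    print(f"{title}: {gain_db:+.1f}dB -> {after:.1f} LUFS")
```

Every track is turned down by the same 4dB, so the 11 LU gap between the intro and the single is preserved exactly as it was mastered.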


How does Amazon Music use Loudness Normalization?

Amazon Music normalizes audio to an integrated -13 LUFS and, like Tidal, only turns audio down once it crosses that loudness; it does not turn audio up if it’s quieter than the target. Amazon Music uses loudness normalization as its default listening setting, but it can be disabled by listeners.

Amazon Music normalizes to -13 LUFS.

Amazon Music uses the MP3 lossy codec at 320kbps. Amazon also has an HD subscription option which increases the highest quality to 850kbps.

Amazon HD uses a FLAC format. When mastering for Amazon Music, it’s best to leave 1 to 2dBTP of headroom to avoid distortion caused by encoding.

It's best to leave 2dBTP of headroom to avoid clipping distortion during encoding.

How does SoundCloud use Loudness Normalization?

SoundCloud recently adopted loudness normalization as its default setting and normalizes audio to an integrated -14 LUFS, similar to many other popular streaming services. SoundCloud uses both Ogg Vorbis and AAC lossy formats during playback but does not disclose their bitrate or playback quality.

SoundCloud normalizes music to -14 LUFS.

If your track is quieter than -14 LUFS, SoundCloud recommends your max peak is less than -1dBTP. If your track is louder than -14 LUFS, SoundCloud recommends your max peak is less than -2dBTP.
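This recommendation boils down to a simple rule, sketched below. The function name is hypothetical, and treating a master that sits exactly at -14 LUFS the same as a quieter one is our own assumption, since the guidance only covers tracks that are quieter or louder than the target.

```python
def recommended_max_peak(integrated_lufs, target_lufs=-14.0):
    """Return the suggested true-peak ceiling in dBTP for a given master loudness."""
    if integrated_lufs > target_lufs:
        # Louder than the target: leave more room for encoding.
        return -2.0
    # Quieter than the target (or exactly on it, by assumption).
    return -1.0


for loudness in (-18.0, -14.0, -9.0):
    print(f"{loudness} LUFS master -> keep peaks under {recommended_max_peak(loudness)}dBTP")
```

The same rule matches Spotify's guidance covered earlier: -1dBTP for typical masters and -2dBTP for louder ones.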

Its lower quality makes it necessary to master with a lower peak.

How does Bandcamp use Loudness Normalization?

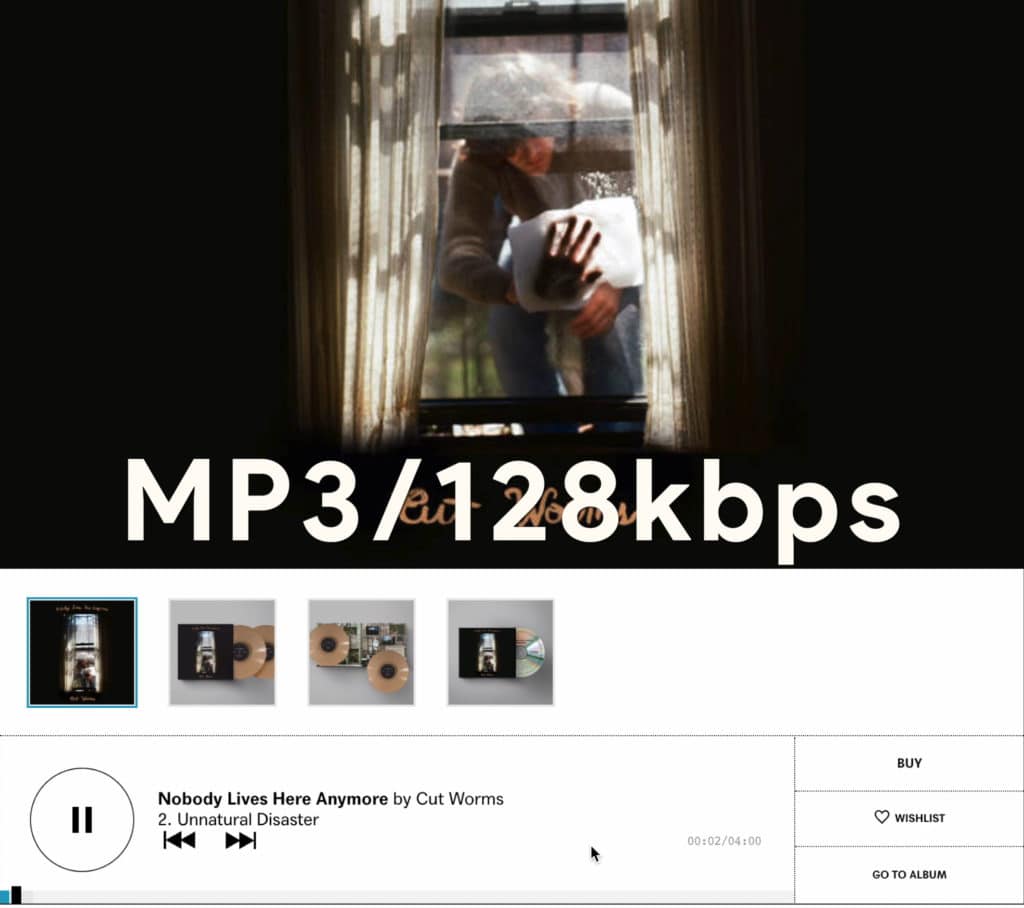

Supposedly, Bandcamp does not normalize audio. It does not provide any information regarding its normalization practices at the time of writing this; however, it did not normalize audio in the past. Bandcamp streams music using an MP3 codec at 128kbps, but its downloads average about 250kbps.

Bandcamp streams at very low quality.

Because Bandcamp streams music at such low quality, it’s important to leave 2dBTP of headroom, if not a little more to ensure clipping distortion does not occur.

It's best to master your signal peaking at -2dBTP.

How does Netflix use Loudness Normalization?

Netflix normalizes audio much differently than other audio streaming services - it only accepts files that are lower than an integrated -27 LUFS, with a max peak of -2dBTP. Netflix will not accept any videos with audio that exceeds either of these two limits, meaning it also normalizes audio upward.

Netflix’s audio codec settings are incredibly complex and depend on the video format used, the region it’s streamed in, and the device it’s being streamed on.

Regardless of the audio codec, you cannot make the audio have peaks higher than -2dBTP.
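If you already have loudness and true-peak readings from your meter, checking them against these two limits is straightforward. The sketch below covers only the two figures quoted above and is not a full delivery specification; the function name and messages are our own.

```python
def netflix_issues(integrated_lufs, max_peak_dbtp,
                   loudness_limit=-27.0, peak_limit=-2.0):
    """Return a list of problems found against the two limits quoted above."""
    issues = []
    if integrated_lufs > loudness_limit:
        over = integrated_lufs - loudness_limit
        issues.append(f"integrated loudness is {over:.1f} LU over {loudness_limit} LUFS")
    if max_peak_dbtp > peak_limit:
        over = max_peak_dbtp - peak_limit
        issues.append(f"max peak is {over:.1f}dB over {peak_limit}dBTP")
    return issues


print(netflix_issues(-27.5, -3.0))   # []
print(netflix_issues(-24.0, -1.0))   # two issues reported
```

An empty list means both readings are within the limits; anything else tells you which measurement needs to come down and by how much.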

Conclusion

Each streaming service is unique and introduces different settings when affecting your audio.

Here is a helpful list of each streaming service, the integrated LUFS they target, a good max dBTP for the platform, and the audio codec they use when streaming audio.

Spotify: -14 LUFS, -1dBTP (for quieter masters) -2dBTP (for louder masters), Ogg Vorbis

YouTube: -14 LUFS, -2dBTP, AAC/256kbps

Apple Music: -16 LUFS, -1dBTP, AAC/256kbps

Tidal: -14 LUFS, -1dBTP (for quieter masters) -2dBTP (for louder masters), as low as AAC/320kbps, as high as FLAC at a 352kHz sampling rate, 24-bit

Amazon Music: -13 LUFS, -1dBTP (for quieter masters) -2dBTP (for louder masters), MP3/320kbps

SoundCloud: -14 LUFS, -1dBTP (for quieter masters) -2dBTP (for louder masters), Ogg Vorbis & AAC (undisclosed kbps)

Bandcamp: No normalization currently, -2dBTP, Streaming: MP3/128kbps, Downloaded: MP3/250kbps average

Netflix: -27 LUFS (maximum accepted), -2dBTP, codec varies by video format, region, and device

Use this chart when you’re confused about how loud to make your music. Also, keep in mind that these figures may change with time, and will definitely not be the last alterations these streaming services make to their formats and streaming quality.

Furthermore, although these normalization settings exist, they should not dictate how you master. Although it’s easy to master to an integrated -14 LUFS, don’t feel as if this is the only loudness you can/should master to.

Make your masters as loud as you or your clients want (within reason) and in a way that best suits the recording and genre.
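If you'd like to see what each music service would do to a master of a given loudness, the targets in the chart can be dropped into a small lookup. This is only a sketch built from the figures in this post, which may change over time. YouTube, Tidal, and Amazon Music are treated as down-only as described above; treating SoundCloud as adjusting in both directions is an assumption, since its behavior with quiet tracks isn't covered here.

```python
# Integrated loudness targets in LUFS, taken from the chart above.
TARGETS = {
    "Spotify": -14.0,
    "YouTube": -14.0,
    "Apple Music": -16.0,
    "Tidal": -14.0,
    "Amazon Music": -13.0,
    "SoundCloud": -14.0,
}

# Services described above as only turning audio down.
DOWN_ONLY = {"YouTube", "Tidal", "Amazon Music"}


def playback_gains(master_lufs):
    """Return {service: gain_db} that each service would apply to the master."""
    gains = {}
    for service, target in TARGETS.items():
        gain_db = target - master_lufs
        if gain_db > 0 and service in DOWN_ONLY:
            gain_db = 0.0
        gains[service] = gain_db
    return gains


for service, gain_db in playback_gains(-9.0).items():
    print(f"{service}: {gain_db:+.1f}dB")
```

For a -9 LUFS master, every service turns the track down by 4 to 7dB, which shows how little of that extra loudness actually reaches the listener.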

If you have a mix that you’d like to hear mastered for a specific service, and you’re not sure of how loud to make it, send it to us here:

We’ll master it for you and send you a free sample for you to review

Have you mastered music for any of these services before?